Introduction

Here is a not so short note (Rolling Update) for machine learning and artificial intelligience. Main concepts and some references are presented. This note is based on Wikipedia, Andrew Ng’s Lecture Notes CS229 of Standford, Machine Learning course of SJTU IEEE-yangyang, and Machine Learning Crash Course of Google.

In this note, let’s discuss about the support vector machine (SVM). Reference is added: the course of CMU (http://www.cs.cmu.edu/~guestrin/Class/10701-S07/Slides/

Linear classifier

A classification decision is made by the linear classifier based on the value of a linear combination of the characteristics (features), which is typically presented to the machine by a feature vector.

- Input:

- Output:

is such a function, that maps the values above a threshold to the first class and other values to the second class, or give the probability. is a vector of weights, which can be learned from a set of labeled samples. There are two classes of methods for determining the parameters of :

- Generative model: a model of the joint probability distribution on

- Linear discriminant analysis (LDA)

- Naive Bayes classifier

- Hidden Markov model

- discriminative model: a model of the conditional probability of with an observation as

- Logistic regression: maximum likelihood estimation of

- Perceptron: an algorithm that attempts to fix all errors encountered in the training set

- SVM: maximizes the margin between the decision hyperplane and the examples in the training set.

- Neural networks

Generative algorithms try to learn which can be transformed into later to classify the data. Discriminative algorithms try to learn directly from the data and then classify data, does not care how the data was generated (). Here is the ralation:

Generative algorithms are suitable for unsupervised learning. Discriminative models usually proceeds in a supervised way.

Maximum margin classifier

In the case of support vector machines, a data point is viewed as a -dimensional vector (a list of numbers). The points was supposed to separated by a dimensional hyperplane. Many hyperplanes exists. The best choice is the one that represents the largest margin between the two classes, which is known as the maximum-margin hyperplane. This classifier is also called as a maximum margin classifier.

The equation of a hyperplane can be written as:

Note that .

Two classes can be determined by:

- leads to

- leads to

The problem is how to find the optimum hyperplane with a maximum margin.

Functional margin

The functional margin is defined as:

The result would be positive for properly classified points and negative otherwise. Hence, a large functional margin represents a confident and a correct prediction. The functional margin has a problem. For example, the two equations and represent the same hyper plane, but the functional margins differs.

Geometrical margin

The geometrical margin is defined as:

where is the normal vector of the hyper plane. Here is the Euclidean norm (2-norm), gives the ordinary distance from the origin to the point .

The margin in maximum margin classifier refers to the geometrical margin. Samples on the margin are called the support vectors.

If the training data is linearly separable, two parallel hyperplanes can be selected to separate the two classes of data so that the distance between them (called margin) is as large as possible. After normalized or standardized, these hyperplanes are:

- label 1:

- label -1:

The geometrical margin between these two hyperplanes is . The optimization problem is to minimize s.t. (subject to) for each in data. This optimization is called the constrained optimization with the ‘s.t.’. It can be solved efficiently by quadratic programming (). The max-margin hyperplane is completely determined by those which lie nearest to the hyperplane. These are called support vectors. Moving other points a little does not affect the decision boundary. To predict the labels of new points, only the support vectors are necessary. We use for matematical purpose when derivating the Lagrangian function to optimize it.

Soft-margin for not linearly separable data

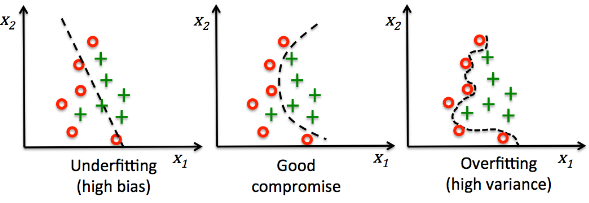

It could be very complicated or over-fitting to classify all samples correctly in practical. For the soft-margin SVM, errors in classification are allowed, which is more common. The criteria now is to minimize the number and the value of noises. E.g. A large margin causes lots of misclassified samples. A smaller margin can reduce the number of errors, but each error becomes bigger.

The optimization problem is to minimize s.t. and for each in data. is pronounced as /ksi/, called “slack” variables, pay linear penalty.

- means the classification of sample is correct. The larger is, the bigger mistake the classification makes.

- means sample is in the margin, resulting to margin violation.

- stands for sample is misclassified.

is the tradeoff parameter, chosen by cross-validation. A large means we will get a small margin width and reduce the number of errors as much as possible. Contrarily, a small means the margin width can be large even though we get more errors. The product is the penalty for misclassifying. If , the soft-margin is recovered to the hard-margin SVM.

is defined as:

With the regularization and introducing the Hinge loss:

the optimization problem is to minimize:

It belongs to the quadratic programming () with a convex function .

A convex function is the line segment between any two points on the graph lies above or on it, e.g., . For a twice differentiable function of a single variable, if the second derivative is always greater than or equal to zero for its entire domain then the function is convex. The locally optimal point of a convex problem is globally optimal.

Lagrangian Duality

The standard form convex optimization problem can be expressed as: minimize , subject to and . The generalized Lagrange function is introduced as:

where and are the Lagrangian multipliers. must be satisfied.

There is a lemma: if satisfies the primal constraint ,

Otherwise, the maximum of can be , which is related to the values of .

The primal optimization problem is equivalent to:

The dual problem is:

If both of the optimization solution of the primal problem and that of the dual problem exsit, we have the theorem of weak duality:

Proof:

If the primal optimization problem is solved through the dual optimization problem, we need make sure that $ d^* = p^* $, which is called the strong duality. If there exist a saddle point of ,

The saddle point satisfies the Karush-Kuhn-Tucker (KKT) conditions:

Note that, can be derived if is satisfied. The theorem is described as: If satisfy the KKT condition, it is also a solution to the primal and the dual problems.

Recall the optimization problem in the section Geometrical margin, as:

s.t. , note that there are conditons.

The Lagrangian function is:

Thus, the primal optimization problem is equivalent to:

The corresponding dual problem is:

The can be solved by letting and .

Thus,

The training data points whose the weight are nonzero are called the support vectors. The optimal is a linear combination of a small number of data points. For a new data , we can compute:

where is classified as class 1 if and as class 2 otherwise. It means we make decisions by comparing each new example with only the support vectors.

The kernel method

The SVM optimization problem is rewritten as finding the maximum of:

s.t. and . Note that there are conditions. The data points only appear as inner product, not their explicit coordinates.

Typically in the original features, the data is not linearly-separable. We can replace by for some nonlinear , and the decision boundary is a nonlinear surface . For example, let , the features are expanded into three dimensional point as (x,y,xy) from two dimensional point (x,y).

Define the kernel function by:

The expanded SVM optimization problem is written as finding the maximum of:

s.t. and . For a new data , let’s see:

where is classified as class 1 if and as class 2 otherwise.

The kernel trick

The problem is, the feature expansion yield lots of features because it is high dimensional. The feature space is typically infinite-dimensional. For example, the polynomial of degree on original features yields expanded features. Thus the feature expansion could be inefficient and sometimes overfitting.

By using the kernel function mapping, we can operate in high-dimensional and implicit feature space only computing the inner products \phi(\vec{x_i})^T \phi(\vec{x_j}). This operation is often computationally cheaper than the explicit computation of . This approach is called the kernel trick, to make optimization efficient when there are lots of features.

- Linear kernel:

- Polynomial kernel:

- Radial basis kernel (RBK):

The kernel matrix

If we have the feature mapping for , the kernel is a matrix as:

where is a symmetric positive-semidefinite matrix (or non-negative definite: all of whose eigenvalues are nonnegative).

The necessary and sufficient condition of kernel, Mercer’s theorem: is a continuous symmetric non-negative definite kernel. The eigenvalues is nonnegative. This is also called the Mercer kernel.

Sequential minimal optimization (SMO) algorithm

Coordinate descent / ascend

Coordinate descent is used to deal with the unconstrained optimization problems. To find the minimum of a multivariable function, coordinate descent (ascend) is an optimization algorithm that successively minimizes (maximizes) along one coordinate directions while fixing all other coordinates. It may get stuck for a non-smooth multivariable function.

SMO

The expanded SVM optimization problem above is a constrained one.

Training a support vector machine requires the solution of a very large quadratic programming (QP) optimization problem. Sequential minimal optimization breaks this large QP problem into a series of smallest possible QP problems. These small QP problems are solved analytically, which avoids using a time-consuming numerical QP optimization as an inner loop. (John C. Platt, 1998)

The constraint involving the Lagrange multipliers , the smallest possible problem involves two such multipliers, and . The SMO algorithm is:

- Select one pair and and optimize the pair () while holding other multipliers fixed.

- Repeat till convergence.

- Solved when all the multipliers satisfy the KKT conditions. Heuristics are used to choose the pair of multipliers so as to accelerate the rate of convergence, since there are choices for ().

where is the negative of the sum over the rest of terms in the equality constraint, which is fixed in each iteration.

The SMO algorithm can be considered a special case of the Osuna algorithm, where the size of the optimization is two and both Lagrange multipliers are replaced at every step with new multipliers that are chosen via good heuristics.